Balancing datasets using Crucio Safe-Level-SMOTE

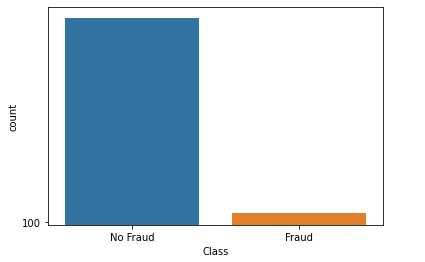

Unbalanced data

In simple terms, an unbalanced dataset is one where the target variable has many more observations in one class than in the others. A typical example is a dataset of transactions: usually about 99% of it consists of ordinary, non-fraudulent transactions, and only 1% are fraud.
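A quick way to see whether a dataset is imbalanced is to look at the class proportions of its target column. Here is a minimal pandas sketch on a made-up transactions table. The `amount` and `is_fraud` columns are hypothetical.

```python
import pandas as pd

# Toy data (hypothetical): 99 ordinary transactions and 1 fraudulent one.
df = pd.DataFrame({
    "amount": list(range(100)),
    "is_fraud": [0] * 99 + [1],
})

# Share of each class in the target column.
proportions = df["is_fraud"].value_counts(normalize=True)
print(proportions)

# Imbalance ratio: size of the largest class divided by the smallest one.
counts = df["is_fraud"].value_counts()
imbalance_ratio = counts.max() / counts.min()
print(f"imbalance ratio: {imbalance_ratio:.0f}:1")
```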

What is SLS and how does it work?

Safe-Level-SMOTE (SLS) is based on SMOTE, but before generating synthetic instances it assigns each positive (minority) instance a safe level. Each synthetic instance is then placed closer to whichever of the two instances it is interpolated between has the larger safe level, so synthetic instances are generated only in safe regions. The safe level is computed from the k nearest neighbors of an instance and is defined as:

safe level (SL) = the number of positive instances among the k nearest neighbors of an instance.
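The definition translates directly into code. The sketch below is an illustration under stated assumptions, not Crucio's implementation. It uses brute-force Euclidean distances in NumPy, searches neighbors over the whole dataset (both classes), and excludes each instance from its own neighbor list. The function name `safe_levels` is hypothetical.

```python
import numpy as np

def safe_levels(X, y, k=5, positive_label=1):
    """Safe level of every instance: the number of positive (minority)
    instances among its k nearest neighbours in the whole dataset.
    Sketch only, not Crucio's code."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Pairwise Euclidean distances between all instances.
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    # An instance is not its own neighbour.
    np.fill_diagonal(dist, np.inf)
    # Indices of the k closest instances for every row.
    neighbours = np.argsort(dist, axis=1)[:, :k]
    # Count how many of those neighbours are positive.
    return (y[neighbours] == positive_label).sum(axis=1)


# Toy 1-D data: three positives on the left, one positive at x=12 among negatives.
X = [[0], [1], [2], [10], [11], [12]]
y = [1, 1, 1, 0, 0, 1]
print(safe_levels(X, y, k=2))  # → [2 2 2 1 1 0]
```

The positive instance at x=12 gets a safe level of 0 because both of its nearest neighbors are negative.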

If the safe level of an instance is close to 0, the instance is likely noise; if it is close to k, the instance is considered safe. The safe level ratio is defined as:

safe level ratio = SL of a positive instance / SL of its selected nearest neighbor.

The ratio determines where between the two instances a synthetic instance is placed, so that it lands in a safe position. By generating more synthetic minority instances around the instance with the larger safe level, SLS aims to achieve better classification performance than plain SMOTE.
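The way the ratio selects a position can be sketched as follows. As described in the original Safe-Level-SMOTE paper, a random gap in [0, 1] interpolates from the positive instance p towards its selected neighbor n. The ratio narrows the range of that gap:

* When both instances are equally safe, the gap can take any value in the full range.
* When p is safer, the gap is restricted to values close to p.
* When n is safer, the gap is restricted to values close to n.

If n has safe level 0, p is simply duplicated. If both have safe level 0, nothing is generated. Below is a minimal NumPy sketch of that rule. The function name `synthesize` and the choice of drawing a separate gap for each feature belong to this sketch, not to Crucio's code.

```python
import numpy as np

def synthesize(p, n, sl_p, sl_n, rng):
    """Create one synthetic instance between positive instance p and its
    selected neighbour n, biased towards the one with the larger safe level.
    Sketch only: gap rules as described for Safe-Level-SMOTE, not Crucio's code.
    Returns None when both instances look like noise."""
    p = np.asarray(p, dtype=float)
    n = np.asarray(n, dtype=float)
    size = p.shape
    if sl_n == 0:
        if sl_p == 0:
            return None                               # both are noise: generate nothing
        gap = np.zeros(size)                          # n is noise: duplicate p
    else:
        ratio = sl_p / sl_n
        if ratio == 1:
            gap = rng.uniform(0.0, 1.0, size)         # equally safe: anywhere on the segment
        elif ratio > 1:
            gap = rng.uniform(0.0, 1.0 / ratio, size) # p is safer: stay near p
        else:
            gap = rng.uniform(1.0 - ratio, 1.0, size) # n is safer: move towards n
    return p + gap * (n - p)


rng = np.random.default_rng(45)
p, n = [0.0, 0.0], [1.0, 1.0]
print(synthesize(p, n, sl_p=4, sl_n=1, rng=rng))  # coordinates in [0, 0.25): near p
print(synthesize(p, n, sl_p=1, sl_n=4, rng=rng))  # coordinates in [0.75, 1): near n
```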

Using Crucio SLS

If you haven’t installed Crucio yet, run the following in the terminal:

pip install crucio

Now we can import the algorithm and create the SLS balancer.

from crucio import SLS

sls = SLS()

balanced_df = sls.balance(df, 'target')

The SLS() constructor accepts the following arguments:

- k (int > 0, default = 5): The number of nearest neighbors from which the algorithm samples data points.

- seed (int, default = 45): The number used to initialize the random number generator.

- binary_columns (list, default = None): The list of binary columns in the dataset, so that sampled values in those columns are rounded to the nearest binary value.
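Interpolating between two rows produces fractional values in a 0/1 column, and `binary_columns` exists to snap those values back to 0 or 1. Here is a minimal pandas sketch of that post-processing step. It assumes simple rounding followed by clipping. The helper name `snap_binary` is hypothetical and not part of Crucio.

```python
import numpy as np
import pandas as pd

def snap_binary(df, binary_columns):
    """Hypothetical helper: round interpolated values in 0/1 columns
    back to the nearest binary value (not Crucio's code)."""
    out = df.copy()
    for col in binary_columns:
        out[col] = np.clip(np.round(out[col]), 0, 1).astype(int)
    return out


# Two synthetic rows produced by interpolation; 'is_member' should be 0 or 1.
synthetic = pd.DataFrame({"age": [31.4, 27.9], "is_member": [0.73, 0.18]})
print(snap_binary(synthetic, ["is_member"]))
```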

The balance() method takes a pandas DataFrame and the name of the target column as parameters.

Example:

For this example I chose a dataset where the task is to predict whether a Pokemon is Legendary. The Legendary class makes up only 8% of the dataset, so the dataset is imbalanced.

Before applying any Crucio module, we recommend first splitting your data into train and test sets and balancing only the train set. This way, the model's performance is evaluated only on natural, unaltered data.
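The recommended workflow can be sketched with pandas alone. The helper `split_then_balance` and the toy `oversample` balancer are hypothetical and exist only for illustration. The stratified split via `groupby(...).sample(frac=...)` is one way to do it. scikit-learn's `train_test_split` with `stratify` would work as well.

```python
import pandas as pd

def split_then_balance(df, target, balance, test_size=0.2, seed=45):
    """Hypothetical helper: stratified train/test split, balancing only the
    training part. `balance` is any callable balance(df, target_column)
    that returns a balanced DataFrame."""
    # Sample the same fraction from every class, so the test set keeps
    # the natural class distribution.
    test = df.groupby(target).sample(frac=test_size, random_state=seed)
    train = df.drop(index=test.index)
    return balance(train, target), test


def oversample(train, target):
    """Hypothetical stand-in balancer: duplicate minority rows at random."""
    counts = train[target].value_counts()
    extra = [
        train[train[target] == label].sample(counts.max() - n, replace=True, random_state=0)
        for label, n in counts.items()
        if n < counts.max()
    ]
    return pd.concat([train, *extra])


# Toy data with an 8% positive class, similar to the Legendary example.
df = pd.DataFrame({"x": range(100), "y": [0] * 92 + [1] * 8})
train_bal, test = split_then_balance(df, "y", oversample, test_size=0.25)
print(train_bal["y"].value_counts())  # balanced training set
print(test["y"].value_counts())       # untouched, still imbalanced
```

With Crucio, the balancer could be the `balance` method shown earlier, for instance `split_then_balance(df, 'Legendary', SLS().balance)`. This assumes `balance()` accepts the training DataFrame and the target column name as described above.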

A basic Random Forest trained on the imbalanced data reaches an accuracy of approximately 96%, so now it’s time to test the SLS algorithm.

new_df = sls.balance(df, 'Legendary')

After balancing the data with SLS, accuracy increased from 96% to 99%.

Conclusion:

SLS is an interesting technique that tries to improve on the popular SMOTE algorithm. I encourage you to test it and compare it with other balancing methods from Crucio, such as SMOTETOMEK, SMOTEENN, ADASYN, and ICOTE.

Thank you for reading!

Follow Sigmoid on Facebook, Instagram, and LinkedIn:

https://www.facebook.com/sigmoidAI

https://www.instagram.com/sigmo.ai/

https://www.linkedin.com/company/sigmoid/