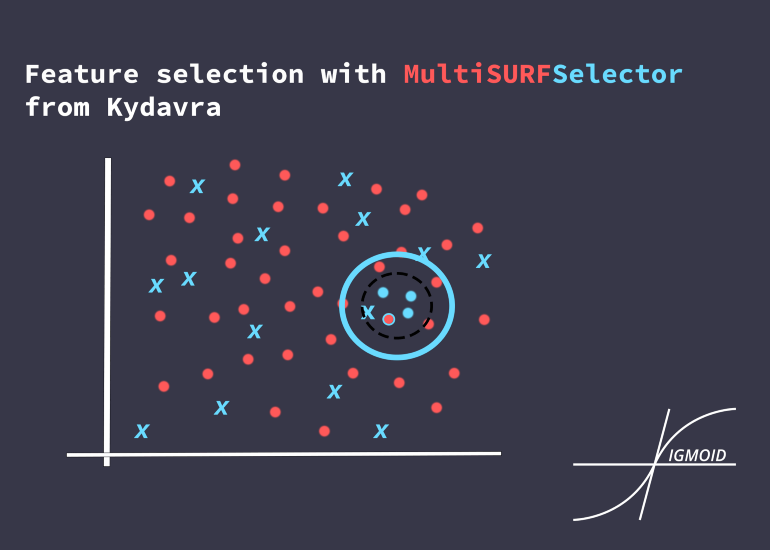

Feature Selection with MultiSURFSelector from Kydavra

Feature selection is an important task when working with complex data. One method that can help with it is the MultiSURF algorithm. MultiSURF is a Relief-based algorithm (RBA), which makes it an individual-evaluation filter method: it assigns each feature its own relevance score without training a predictive model.
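For orientation, the original Relief algorithm scores a numeric feature $A$ by repeating the update below for $m$ sampled instances $R$. Here $H$ is the nearest neighbor of $R$ from the same class (the nearest hit) and $M$ is its nearest neighbor from another class (the nearest miss). This is the textbook Relief form, not necessarily what Kydavra computes internally:

$$
W[A] \leftarrow W[A] - \frac{\operatorname{diff}(A, R, H)}{m} + \frac{\operatorname{diff}(A, R, M)}{m},
\qquad
\operatorname{diff}(A, I_1, I_2) = \frac{\lvert A(I_1) - A(I_2) \rvert}{\max(A) - \min(A)}
$$

A feature gains weight when it separates $R$ from its nearest miss and loses weight when it differs between $R$ and its nearest hit. Relief-based algorithms such as MultiSURF differ mostly in how many neighbors they use and how they choose them.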

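The MultiSURF algorithm builds on Relief but differs in how it picks neighbors. For each target instance, it sets a threshold equal to the mean distance between that instance and all other instances, as opposed to the mean distance over all instance pairs in the data (the global threshold used by SURF). Only instances closer than this threshold count as neighbors, so the neighborhood adapts to each instance. Because of this, MultiSURF tends to identify relevant features more reliably, including features that only matter through interactions with others.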
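To make the neighbor rule concrete, here is a simplified NumPy sketch of MultiSURF-style scoring for numeric features and a discrete target. It follows the MultiSURF neighbor rule as we understand it: the threshold is the mean distance to all other instances minus a "dead band" of half the standard deviation of those distances. It also simplifies the weight update by averaging over hits and misses per target instance. Treat it as an illustration, not Kydavra's implementation. `multisurf_scores` is a hypothetical name.

```python
import numpy as np


def multisurf_scores(X, y):
    """Simplified MultiSURF-style feature scores (illustrative sketch only).

    Assumes numeric features and a discrete target; not Kydavra's code.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n, p = X.shape
    # Min-max scale each feature so per-feature differences lie in [0, 1]
    span = X.max(axis=0) - X.min(axis=0)
    span[span == 0] = 1.0
    Xs = (X - X.min(axis=0)) / span
    weights = np.zeros(p)
    for i in range(n):
        diffs = np.abs(Xs - Xs[i])   # (n, p) feature differences to instance i
        dist = diffs.sum(axis=1)     # Manhattan distance to every instance
        others = np.arange(n) != i
        d_other = dist[others]
        # Instance-specific threshold: mean distance to all other instances,
        # minus a dead band of half their standard deviation (assumed rule)
        threshold = d_other.mean() - d_other.std() / 2
        near = others & (dist < threshold)
        hits = near & (y == y[i])
        misses = near & (y != y[i])
        # Relief-style update: reward differences to near misses,
        # penalize differences to near hits (averaged per target instance)
        if hits.any():
            weights -= diffs[hits].mean(axis=0)
        if misses.any():
            weights += diffs[misses].mean(axis=0)
    return weights / n


# Toy data: only feature 0 determines the class
rng = np.random.default_rng(0)
X = rng.random((200, 4))
y = (X[:, 0] > 0.5).astype(int)
scores = multisurf_scores(X, y)
print(np.argsort(scores)[::-1])  # feature 0 should be ranked first
```

The irrelevant features end up with scores near or below zero. The class-defining feature gets a clearly positive score, because its near misses differ from the target much more than its near hits do.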

Using MultiSURF from the Kydavra library

You will need to install Kydavra first for the MultiSURF magic to happen: `pip install kydavra`

If it is already installed, do not forget to update it: `pip install --upgrade kydavra`

Next, we need to import the selector, create it, and apply it to our dataset:

from kydavra import MultiSURFSelector

msrf = MultiSURFSelector()

selected_cols = msrf.select(df, 'target')

The select method takes the DataFrame and the name of the target column as parameters and returns the selected column names. The selector itself, MultiSURFSelector, takes the following parameters:

- n_neighbors (int, default = 7): the number of neighbors to consider when assigning feature importance scores.

- n_features (int, default = 5): the number of top features (ranked by their Relief-based score) to keep after feature selection is applied.
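To make the role of `n_features` concrete, here is a hypothetical pandas sketch of what a `select(df, 'target')` call does conceptually. It scores every non-target column and returns the names of the `n_features` best ones. `select_top_features` and `class_mean_gap` are illustrative names, not part of Kydavra. The scorer is a deliberately simple stand-in, not MultiSURF, so `n_neighbors` plays no role here.

```python
import numpy as np
import pandas as pd


def select_top_features(df, target, score_fn, n_features=5):
    """Hypothetical sketch of a select(df, target) call: score every
    non-target column with score_fn and return the n_features best names."""
    X = df.drop(columns=[target])
    y = df[target].to_numpy()
    scores = pd.Series(score_fn(X.to_numpy(dtype=float), y), index=X.columns)
    # Highest score first; keep only the top n_features column names
    return scores.nlargest(n_features).index.tolist()


def class_mean_gap(X, y):
    # Stand-in scorer for a binary target (NOT MultiSURF): the absolute
    # difference between the per-class means of each feature
    return np.abs(X[y == 1].mean(axis=0) - X[y == 0].mean(axis=0))


df = pd.DataFrame({
    "a": [0, 1, 0, 1],
    "b": [5, 5, 5, 6],
    "c": [0, 0, 1, 1],
    "target": [0, 1, 0, 1],
})
print(select_top_features(df, "target", class_mean_gap, n_features=2))  # ['a', 'b']
```

Raising `n_features` keeps more columns from the same ranking, so it trades a smaller feature set against the risk of dropping something useful.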

To find the best feature subset for your data, experiment with different values of these parameters.

Here is an example.

We will test the performance on the Heart Disease UCI dataset.

First, we train a Logistic Regression model on the following features:

This gives an accuracy score of 0.797.

After applying MultiSURFSelector with n_features set to 9, we get the following features:

Retraining the Logistic Regression model on only those features gives an accuracy score of 0.826. It ain’t much, but it’s honest work.
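The article does not show its evaluation code. A minimal sketch of such a before/after comparison could look like the code below. It assumes scikit-learn and a stratified hold-out split whose size and seed are our own choices, not the article's. `holdout_accuracy` is a hypothetical helper, and the synthetic `df` stands in for the Heart Disease data.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split


def holdout_accuracy(df, features, target="target", test_size=0.25, seed=42):
    """Fit Logistic Regression on `features` and return hold-out accuracy.

    The split settings are assumptions; the article does not state them.
    """
    X_train, X_test, y_train, y_test = train_test_split(
        df[features], df[target],
        test_size=test_size, random_state=seed, stratify=df[target],
    )
    model = LogisticRegression(max_iter=1000)
    model.fit(X_train, y_train)
    return accuracy_score(y_test, model.predict(X_test))


# Synthetic stand-in data: the target depends only on f0 and f1
rng = np.random.default_rng(0)
df = pd.DataFrame(rng.normal(size=(300, 6)), columns=[f"f{i}" for i in range(6)])
df["target"] = (df["f0"] + df["f1"] > 0).astype(int)

all_features = [c for c in df.columns if c != "target"]
selected = ["f0", "f1"]  # in practice: the list returned by msrf.select(df, 'target')
print(holdout_accuracy(df, all_features), holdout_accuracy(df, selected))
```

Keeping `test_size` and `random_state` fixed means both runs are scored on the same hold-out rows, so the two accuracies are directly comparable.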

Conclusion

MultiSURFSelector can help you narrow a dataset down to its most informative features, and that can improve the accuracy score you are striving for. In our example, accuracy rose from 0.797 to 0.826 using only 9 selected features.

Made with ❤ by Sigmoid.

If you have tried Kydavra, we invite you to share your impressions by filling out this form.

Follow us on:

- https://www.facebook.com/sigmoidAI

- https://www.instagram.com/sigmo.ai/

- https://www.linkedin.com/company/sigmoid/