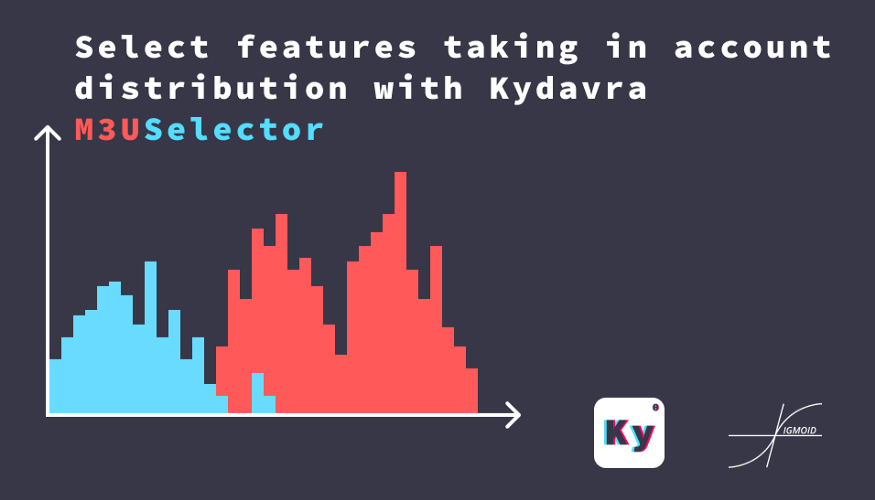

Select features taking into account distribution with Kydavra M3USelector

In the last article, we discussed the MUSESelector, a kydavra selector that performs feature selection on a data frame. The biggest drawback of this method is that it works only for binary classification problems. This is where an extension of the method comes into play: M3U (Minimum Mean Minimum Uncertainty), implemented in kydavra as M3USelector, which supports multiclass classification.

Using M3USelector from the Kydavra library.

If you haven’t installed Kydavra yet, just type the following in the command line.

pip install kydavra

If you already have the first version of kydavra installed, please upgrade it by running the following command.

pip install --upgrade kydavra
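If you are not sure whether kydavra is already installed, or which version you have, a small check with the Python standard library can tell you before you decide to upgrade. This is only a sketch: the helper name `installed_version` is made up for illustration, and it relies on `importlib.metadata`, which is available from Python 3.8 onward.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Prints a version string if kydavra is installed, otherwise None.
print(installed_version("kydavra"))
```

If the call prints `None`, use the install command; otherwise the upgrade command is the one to run.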

Next, we need to import the model, create the selector, and apply it to our data:

from kydavra import M3USelector

m3u = M3USelector()

selected_cols = m3u.select(df, 'target')

The select function takes as parameters the pandas data frame and the name of the target column. The M3USelector constructor takes the following parameters:

- num_features (int, default = 5) : The number of features to retain in the data frame.

- n_bins (int, default = 20) : The number of bins into which numerical features are split.

We strongly recommend experimenting only with the num_features parameter and leaving the others at their default settings.
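To make the n_bins parameter more concrete, here is a rough sketch of what splitting a numerical feature into bins looks like, together with the class counts that end up in each bin. It is an illustration only, not kydavra's internal code: the equal-width binning strategy, the function names, and the toy data are all assumptions, and it is written in plain Python so it runs without extra dependencies.

```python
from collections import Counter


def equal_width_bin_index(value, lo, hi, n_bins):
    """Return the 0-based index of the equal-width bin that holds `value`."""
    if hi == lo:
        return 0  # a constant feature collapses into a single bin
    idx = int((value - lo) / (hi - lo) * n_bins)
    return min(idx, n_bins - 1)  # the maximum value belongs to the last bin


def bin_class_counts(values, labels, n_bins=20):
    """Count how many samples of each class fall into each bin of a feature."""
    lo, hi = min(values), max(values)
    counts = [Counter() for _ in range(n_bins)]
    for value, label in zip(values, labels):
        counts[equal_width_bin_index(value, lo, hi, n_bins)][label] += 1
    return counts


# Toy feature with three classes (hypothetical data, 4 bins for readability).
values = [1.0, 1.2, 1.9, 3.1, 3.3, 5.0]
labels = ["a", "a", "b", "b", "c", "c"]

for i, counter in enumerate(bin_class_counts(values, labels, n_bins=4)):
    print(i, dict(counter))
```

With more bins the per-bin class counts describe the feature's distribution in finer detail, but each bin holds fewer samples, which is one reason to be careful when moving away from the default.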

Let’s see an example:

Now we are going to test the performance of the M3USelector on the Glass Classification data set.

To find the best solution, we should iterate through every possible number of features, from 1 to 9 in this data set.

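import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Assumption: `logistic` is a scikit-learn logistic regression; its definition is not shown in the article.
logistic = LogisticRegression()

for i in range(1, 10):
    m3u = M3USelector(num_features=i)
    cols = m3u.select(df, 'Type')
    X = df[cols].values
    y = df['Type'].values
    print(f"{i} - {np.mean(cross_val_score(logistic, X, y))}")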

The result is the following:

1 - 0.47220376522702107

2 - 0.48604651162790696

3 - 0.5750830564784052

4 - 0.560908084163898

5 - 0.6121816168327796

6 - 0.5796234772978959

7 - 0.6308970099667774

8 - 0.6355481727574751

9 - 0.6262458471760797

As we can see, we got the best accuracy, about 0.636, when 8 of the 9 features remained in the table. However, we strongly recommend you try out some more feature processing techniques to improve accuracy further.
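Picking the winner by eye works for nine rows, but the same comparison can be done in code. The sketch below copies the scores printed above into a dictionary and selects the feature count with the highest mean accuracy. The helper `best_num_features` and its `tolerance` argument are our own illustration and not part of kydavra; the tolerance lets you prefer a smaller feature set when its score is almost as good as the best one.

```python
# Mean cross-validation accuracies copied from the run above,
# keyed by the num_features value that produced them.
scores = {
    1: 0.47220376522702107,
    2: 0.48604651162790696,
    3: 0.5750830564784052,
    4: 0.560908084163898,
    5: 0.6121816168327796,
    6: 0.5796234772978959,
    7: 0.6308970099667774,
    8: 0.6355481727574751,
    9: 0.6262458471760797,
}


def best_num_features(scores, tolerance=0.0):
    """Return the smallest feature count whose score is within `tolerance` of the best."""
    best = max(scores.values())
    return min(k for k, s in scores.items() if best - s <= tolerance)


print(best_num_features(scores))        # 8
print(best_num_features(scores, 0.01))  # 7
```

With a tolerance of 0.01, seven features are enough here, since dropping the eighth costs less than half a percentage point of accuracy.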

If you have used or tried Kydavra, we warmly invite you to fill out this form and share your experience.

Made with ❤ by Sigmoid.