Using Crucio SMOTE and Clustered Undersampling Technique for unbalanced datasets

Unbalanced data

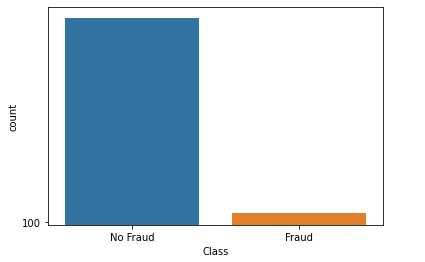

In simple terms, an unbalanced dataset is one in which the target variable has more observations in one specific class than the others. An example can be a dataset for transactions: usually, 99% of this set consists of simple, not fraudulent transactions, and only 1% of them are fraud.

How does SCUT work?

SMOTE and Clustered Undersampling Technique (SCUT) uses the Expectation Maximization (EM) algorithm. The EM algorithm replaces the hard clusters with a probability distribution formed by a mixture of Gaussians. Instead of being assigned to a particular cluster, each member has a certain probability to belong to a particular Gaussian distribution of the mixture. The parameters of the mixture, including the number of Gaussians, are determined with the Expectation Maximization algorithm. An advantage of using EM is that the number of clusters does not have to be specified beforehand. EM clustering may be used to find both hard and soft clusters. That is, EM assigns a probability distribution to each instance relative to each particular cluster.

- For all classes that have some instances less than the mean m, oversampling is performed to obtain a number of instances equal to the mean. The sampling percentage used for SMOTE is calculated such that the number of instances in the class after oversampling is equal to m.

- For all classes that have a number of instances greater than the mean m, undersampling is conducted to obtain a number of instances equal to the mean. Recall that the EM technique is used to discover the clusters within each class Subsequently, for each cluster within the current class, instances are randomly selected such that the total number of instances from all the clusters is equal to m. Therefore, instead of fixing the number of instances selected from each cluster, we fix the total number of instances. It follows that a different number of instances may be selected from the various clusters. However, we aim to select the instances as uniformly as possible. The selected instances are combined to obtain m instances (for each class).

- All classes for which the number of instances is equal to the mean m are left untouched.

Using Crucio SCUT

In case you didn’t install Crucio yet, then use in the terminal the following:

Now we can import the algorithm and create the SCUT balancer.

scut = SCUT()

balanced_df = scut.balance(df, 'target')

The SCUT() initialization constructor can contain the following arguments:

- k (int > 0, default = 5) : The number of nearest neighbors from which SMOTE will sample data points.

- seed (int, default = 45): The number used to initialize the random number generator.

- binary_columns (list, default = None): The list of binary columns from the data set, so sampled data is approximated to the nearest binary value.

The balance() method takes as parameters the panda’s data frame and the name of the target column.

Example:

So I chose a data set where we have to predict the type of a Pokemon (Legendary or not), the Legendary class constitutes 8% out of all dataset, so it is an imbalanced dataset.

We recommend you, before applying any module from Crucio, to first split your data into train and test data sets and balance only the train set. In such a way you will test the performance of the model only on natural data.

The basic Random Forest algorithm gives an accuracy of approximately 92% by training on imbalanced data, so now it’s time to test out SMOTE algorithm.

new_df = scut.balance(df,'Legendary')

After balancing data, we increased accuracy from 92.5% to 97.5%.

Conclusion:

SCUT is a very good technique to balance datasets, it uses undersampling based on clusters which can be very powerful sometimes, so I encourage you to test it and many other balancing methods from Crucio such as SMOTETOMEK, SMOTENC, SLS, ADASYN, ICOTE.

Thank you for reading!

Follow Sigmoid on Facebook, Instagram, and LinkedIn:

https://www.facebook.com/sigmoidAI

https://www.instagram.com/sigmo.ai/

https://www.linkedin.com/company/sigmoid/